Where the Clouds Have No Name: Offline AI at Your Fingertips

Part 1 of a 3-part series on installing a large language model on your computer

Chat with an AI on your computer, even without the Internet. For some, an open-source model can be an excellent alternative to a paid AI service. If you can prompt for something using Claude or ChatGPT, you can usually use the same prompt with a local model.

Here are some other benefits of using a local model:

Privacy & Security: Keeps sensitive data on your device, reducing exposure to third parties

Offline Access: Works without an internet connection

Customization: You can fine-tune a model for personalized tasks and preferences

Cost Efficiency: Avoids subscription fees and cloud computing costs

The AI that stays with you

Many companies and open-source groups offer lightweight AI models with capabilities similar to foundational models like ChatGPT, Claude, Gemini, and Copilot. If ChatGPT knows nearly everything about anything, a lightweight model usually knows just enough about most things.

When you download a model, it is nothing but a big file. You do not run a setup program to get it running. Instead, you need a program that can open the file and provide an interface to chat with the AI.

There are thousands of AI models. Some of the more popular models may be from companies you already know, like:

Mistral is a family of solid models from the French company Mistral AI.

Microsoft Phi is a family of lightweight AI models from Microsoft.

Google Gemma is a family of lightweight AI models from Google.

Finding the right AI for you

Have you ever tried to parallel park a family-sized SUV in a bustling city? It just won’t fit. Do you want a small, efficient car to drive within a 5-mile radius for grocery shopping? You don’t need an all-terrain vehicle when a Toyota Prius would do just fine.

Your computer may have memory to spare, or it may be a lightweight, portable machine with limited capacity. That is why model families come in different sizes.

In general, you should think of these models like this:

Smaller = faster, lower memory requirements, less knowledge to draw on when responding to your prompts

Bigger = slower, higher memory requirements, more knowledge to draw on when responding to your prompts

A model family typically starts with a small version, around 1GB. The larger the model, the more disk space it requires; some can be 10GB or more.
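To make the trade-off concrete, here is a minimal sketch in Python of picking the largest model that fits on your machine. The model names and sizes are made-up placeholders, and the 2 GB of headroom is my own assumption rather than a rule from any vendor, so treat it as an illustration only.

```python
# Hypothetical catalog: names and sizes (in GB) are illustrative placeholders,
# not real download sizes.
CATALOG_GB = {
    "tiny-model": 1.0,
    "medium-model": 4.0,
    "large-model": 10.0,
}


def pick_model(free_memory_gb, headroom_gb=2.0):
    """Return the largest catalog model that fits in memory, or None.

    headroom_gb is an assumed safety margin left for the operating
    system and your other apps.
    """
    budget = free_memory_gb - headroom_gb
    fitting = {name: size for name, size in CATALOG_GB.items() if size <= budget}
    if not fitting:
        return None
    # Bigger generally means more knowledge, so prefer the largest that fits.
    return max(fitting, key=fitting.get)
```

With these placeholder numbers, a machine with 8 GB free would land on the medium model, and one with only 2.5 GB free would get no recommendation at all.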

There are even specialized models. Some are particularly good at vision (reading images, real-time video recognition), whereas others focus on text processing and writing, much as Claude does. Still others can create images or even videos.

Not all open doors stay open

Just because a model says it is open source does not mean you can download and use it without legal review.

I selected the Mistral 7B model for this article because it uses the permissive Apache 2.0 license.

The popular Google Gemma and Meta Llama models use their own custom licenses with usage restrictions, and Microsoft Phi licensing has varied by release, so check each model's license before you use it.

That said, I am not a lawyer and probably got some of this wrong, so please refer to the licenses and consult a lawyer if you are using these models for anything other than personal or hobby use.

Check out my article here to learn more about open-source and domain-specific models.

A direct line to your AI

Let’s say I send you an Excel file and ask you to modify a formula. You can’t just open the file without Excel or a compatible app.

The same is true for local models. You download a big file but need an app to open it so you can chat with the model.

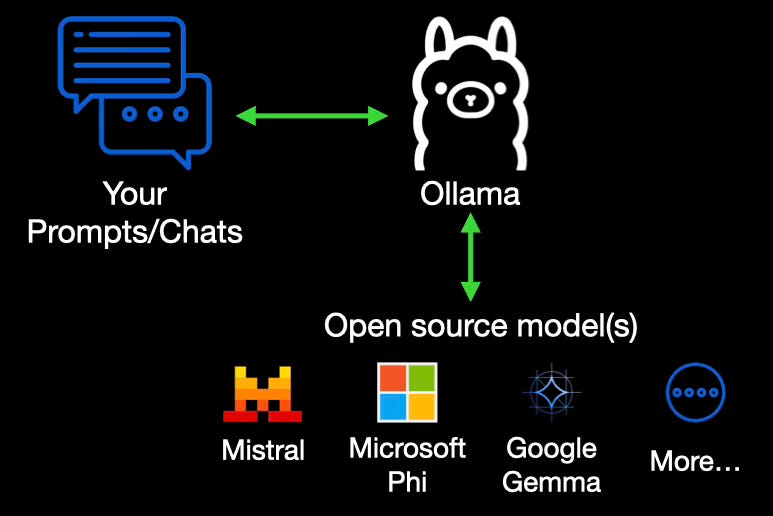

In our scenario, we will use Ollama, a popular open-source tool that sits between you and the model. It does two things:

Manage models: Ollama allows you to download, run, delete, and update models.

Enable prompt chats with models: You can type your prompt, and Ollama will send it to the model. Then, it will send the response back to you.
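For the curious, the second job can also be done from code. The sketch below assumes Ollama is already installed and running on its default local address, and that a model tagged `mistral` has been downloaded; the function names `build_request` and `ask` are my own, chosen for illustration. It sends a prompt to Ollama and reads the reply back.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="mistral"):
    """Build the HTTP request that carries a prompt to Ollama."""
    # "stream": False asks for one complete reply instead of word-by-word chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="mistral"):
    """Send a prompt to the local model and return its reply text."""
    with urllib.request.urlopen(build_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]
```

Calling `ask("Explain what a local model is in one sentence.")` should return the model's answer as text, provided Ollama is running. Nothing leaves your computer, because the address points back at your own machine.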

One letter apart but worlds away

No, Ollama is not the same thing as Meta's Llama. Ollama is an open-source project that connects you to most so-called “open” large language models (LLMs), while Llama is a family of models from Meta (Facebook, Instagram, etc.). Despite the similar names, the two are separate, and as far as I know the Ollama project is not affiliated with Meta. Ollama uses a permissive MIT license. As I mentioned earlier, I am not a licensing expert, so talk to a legal person rather than relying on me.

No buttons, just a prompt

On its own, the setup in this article does not give you a polished chat window; you type your prompt as plain text. That is why this is a multi-part article. In this part, I set you up for success by installing Ollama, downloading a local model, and testing it.

In Part 2 of this three-part series, I will show you how to use another open-source product that will feel very familiar because its interface resembles ChatGPT, Claude, and Gemini.

Getting there, step by step

You will be installing open-source software on your computer. I use the process below, but if you use a work computer, please talk to your IT representative first.